Then, when I re-ran the COPY command, it successfully loaded data from Amazon S3.īottom line: It worked for me. I then created a VPC Endpoint for Amazon S3 and connected it to the private subnet with a "Full Access" policy. When I ran the COPY command, it predictably hung because I did not configure a means for the Redshift cluster to access Amazon S3 via the VPC. To mirror your situation, I launched a different Redshift cluster with Enhanced VPC routing enabled. Second test: Using Enhanced VPC Networking If the data was coming from a different region, it would still be encrypted because communication with Amazon S3 would be via HTTPS. If the data is being loaded from Amazon S3 in the same Region, then the traffic would stay wholly within the AWS network. The Redshift cluster has its own special way to connect to S3, seemingly via a Redshift 'backend'. Therefore, a VPC Endpoint/NAT Gateway is not required to perform a COPY command from Redshift. This worked successfully, with a message of: INFO: Load into table 'foo' completed, 4 record(s) loaded successfully.

#Aborted redshift copy command from s3 code

I'd like to modify the below COPY command to change the file naming in the S3 directory dynamically so I won't have to hard code the Month Name and YYYY and batch number. File names follow a standard naming convention as 'filelabelMonthNameYYYYBatch01.CSV'. Established a connection to the Redshift cluster via psql on the EC2 instance ( psql -h xx.yy. -p 5439 -U username) I have job in Redshift that is responsible for pulling 6 files every month from S3.

#Aborted redshift copy command from s3 install

Ran sudo yum install postgresql on the EC2 instance.Launched an Amazon EC2 Linux instance in the public subnet.

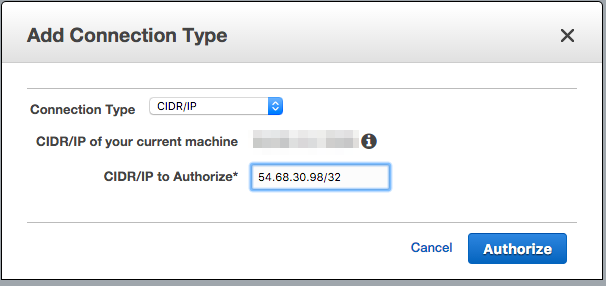

Launched a 1-node Amazon Redshift cluster in the private subnet.Created a new VPC with a Public Subnet and a Private Subnet (no NAT Gateway).To reproduce your situation, I did the following: The security group for redshift cluster allow all conection to port 5439 Type Protocol Port Range Source Description There is no restricitve policies on my bucket and public and private subnet security groups and I already can run SQL queries on my redshift cluster in a private subnet. However, I can not run the copy query from my s3 bucket copy venue I need to upload some file from s3 to redshift as well, so I set up a s3 gateway in my private subnet and updated the route table for my private subnet to add the required route as follow: Destination Target Status Propagated I can successfully connect to my redshift cluster and do basic SQL queries through DBeaver. I have set up a redshift cluster in a private subnet.
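For the monthly job that loads six files named like 'filelabelMonthNameYYYYBatch01.CSV', one option is to build the file names and the COPY statement outside Redshift (for example in whatever scheduler runs the job) and then submit the generated SQL. The Python sketch below assumes English month names, six batches per month, and that all of a month's files sit in one S3 folder; the table name, bucket, folder, and IAM role ARN are hypothetical placeholders. It relies on COPY treating the `FROM 's3://...'` path as a key prefix, so a prefix ending in `Batch` loads every batch for that month without listing the batch numbers.

```python
from datetime import date

# English month names, spelled out so the result does not depend on the
# machine's locale (assumption: the files use English month names).
MONTH_NAMES = (
    "January", "February", "March", "April", "May", "June",
    "July", "August", "September", "October", "November", "December",
)


def monthly_file_names(label: str, year: int, month: int, batches: int = 6) -> list[str]:
    """Return the expected file names, e.g. 'filelabelJanuary2024Batch01.CSV'."""
    month_name = MONTH_NAMES[month - 1]
    # Zero-pad the batch number to two digits: Batch01, Batch02, ...
    return [f"{label}{month_name}{year}Batch{n:02d}.CSV" for n in range(1, batches + 1)]


def copy_statement(table: str, bucket: str, folder: str, label: str,
                   year: int, month: int, iam_role: str) -> str:
    """Build a COPY statement that loads every batch of one month via a shared key prefix."""
    month_name = MONTH_NAMES[month - 1]
    # COPY treats the FROM path as a key prefix, so this matches Batch01, Batch02, ...
    prefix = f"s3://{bucket}/{folder}/{label}{month_name}{year}Batch"
    return (
        f"COPY {table}\n"
        f"FROM '{prefix}'\n"
        f"IAM_ROLE '{iam_role}'\n"
        f"FORMAT AS CSV;"
    )


if __name__ == "__main__":
    # Hypothetical table, bucket, folder and role ARN: replace with your own values.
    today = date.today()
    print(monthly_file_names("filelabel", today.year, today.month))
    print(copy_statement("public.monthly_load", "my-bucket", "incoming", "filelabel",
                         today.year, today.month,
                         "arn:aws:iam::123456789012:role/MyRedshiftRole"))
```

If the job runs at the start of a month to load the previous month's files, pass that month and year instead of today's. When the prefix could also match files you do not want to load, a COPY manifest listing the names returned by `monthly_file_names` is a stricter alternative.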
